Databases

CDC and query-based extraction from all major databases

Smooth pipelines with in-depth integration

Connect Etleap ETL to your application, database, file store, or event stream.

View the data entities available in your source, and select which ones to ingest.

Select the location within your warehouse or lake where Etleap ETL will load the data.

Etleap ETL lets you ingest data into your data warehouse or lake in minutes. It provides an intuitive, user-friendly interface that lets you preview, configure, and operate your pipelines, all without writing a line of code.

Etleap ETL is cloud-native and integrates especially well with Amazon Redshift and Snowflake data warehouses and Amazon S3 data lakes. It supports a broad range of sources, including event streams, databases through advanced change data capture (CDC), and less common custom sources.

Etleap ETL includes an extensive list of data sources for plug-and-play, no-code pipeline creation. These span databases, files, event streams, applications, and more.

Etleap-supported sources typically cover the vast majority of customers’ data sources. When you select a data integration tool, though, a list of 1,000 sources is of little use if your key data source isn’t on it. Etleap ETL is architected for fast expansion of sources, and Etleap will build a new integration within weeks if you need one.

CDC and query-based extraction from all major databases

Native integrations for web-based and on-premises applications

Tame semi-structured data from S3, SFTP, and other file stores

Ingest events from webhooks, Kafka, and more, with 99.99% availability

Customers adopt a centralized data repository to reduce complexity, consolidate analytics, and build organizational trust in data.

Whether it’s a cloud data warehouse or data lake, customers are wise to select an ingest tool that tightly integrates with that destination. As a cloud-native platform, Etleap has invested in this deep integration for Redshift, Snowflake, and S3.

As the popularity of Amazon Redshift and Snowflake has taken off, Etleap’s tight integrations let customers quickly get value from their data.

Etleap is a proud AWS Select partner that works closely with the Redshift product team. Etleap enables customers to fully utilize even the newest Redshift features such as Streaming Ingestion, Data Sharing, and Materialized Views.

Etleap is a proud Snowflake Ready partner, available on Partner Connect. The Etleap ETL Snowflake integration is built to take advantage of the power of Snowflake, managing compute resources to minimize ETL costs.

While many integration products can help fill data lakes, Etleap ETL also makes your ingested data usable for analytics and data products. It integrates tightly with Amazon S3 and AWS Glue Data Catalog, and Etleap’s extensible architecture lets customers integrate data quality tools into their pipelines and populate external data catalog tools with schemas and data lineage.

It’s great that data lakes can store most of your raw data, but when data is left fragmented, analytic processing is slow and difficult. Etleap ETL generates Snappy-compressed Parquet files, creates corresponding Glue Catalog tables, and updates these tables as incremental loads happen. This brings structure to the data in the lake, makes it analytics-ready, and accelerates query performance.

Etleap ETL further improves lake usability with support for snapshots, user-defined partitions, and incremental schema changes. Snapshots ensure queries run on data closer to the size of source data rather than a much larger data set of all incremental changes. User-defined partitions help organize data and improve query performance. Incremental schema change support means users don’t have to manually update the lake’s metadata to incorporate new and removed columns.
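To make the snapshot idea concrete, here is a minimal sketch of collapsing a log of incremental changes into one current row per key. It is an illustration only, not Etleap's implementation: the `Change` record shape, its field names, and the `build_snapshot` helper are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Change:
    """Hypothetical change record; field names are assumptions for this sketch."""
    seq: int              # position in the change log
    op: str               # "insert", "update", or "delete"
    key: int              # primary key of the affected row
    row: Optional[dict]   # full row image, or None for deletes


def build_snapshot(changes):
    """Collapse an incremental change log into one current row per primary key."""
    snapshot = {}
    for change in sorted(changes, key=lambda c: c.seq):
        if change.op == "delete":
            snapshot.pop(change.key, None)
        else:
            # Inserts and updates both overwrite with the latest row image.
            snapshot[change.key] = dict(change.row or {})
    return snapshot


changes = [
    Change(1, "insert", 1, {"id": 1, "status": "new"}),
    Change(2, "insert", 2, {"id": 2, "status": "new"}),
    Change(3, "update", 1, {"id": 1, "status": "paid"}),
    Change(4, "delete", 2, None),
    Change(5, "update", 1, {"id": 1, "status": "shipped"}),
]

snapshot = build_snapshot(changes)
print(len(changes), "change records ->", len(snapshot), "snapshot row(s)")
```

In this example five change records reduce to a single current row, which is why queries against a snapshot scan data closer to the size of the source table.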

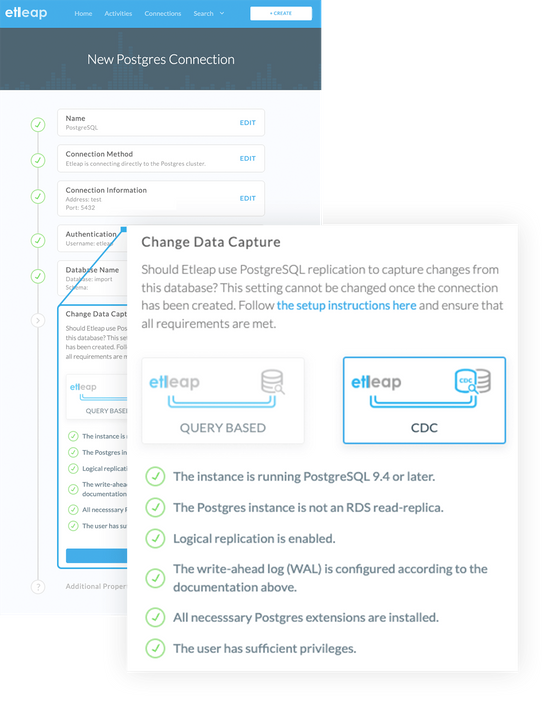

Delivering complete operational database data to a downstream hub is not straightforward. Extraction must minimize its impact on the database while keeping the downstream repository continuously up to date. Query-based and batch approaches satisfy some cases but are not complete or fast enough for most data teams. Change Data Capture (CDC) enables fast availability of current data, and Etleap ETL delivers CDC functionality that customers can use with ease.
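One reason query-based extraction can be incomplete is that polling on a "last modified" column never sees rows that were deleted between polls. The sketch below shows this with an in-memory SQLite database. The `orders` table, its columns, and the `extract_since` helper are hypothetical and only illustrate the general technique, not how Etleap ETL queries a source.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, updated_at INTEGER)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "new", 100), (2, "new", 101)],
)


def extract_since(conn, watermark):
    """Return rows modified after the watermark, plus the advanced watermark."""
    rows = conn.execute(
        "SELECT id, status, updated_at FROM orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (watermark,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else watermark
    return rows, new_watermark


# First poll picks up both rows.
first_batch, watermark = extract_since(conn, 0)

# Between polls, one row is updated and another is hard-deleted.
conn.execute("UPDATE orders SET status = 'paid', updated_at = 102 WHERE id = 1")
conn.execute("DELETE FROM orders WHERE id = 2")

# Second poll sees the update, but nothing signals that id 2 is gone.
second_batch, watermark = extract_since(conn, watermark)
print(second_batch)
```

The second poll returns only the updated row. A log-based CDC approach reads the delete from the database's replication log instead, so the destination can remove the row as well.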

Etleap ETL manages end-to-end CDC pipelines for customers, using AWS Database Migration Service (AWS DMS) to provide enterprise-grade CDC from all major databases, including MySQL, PostgreSQL, SQL Server, and more. Etleap ETL streamlines setup by validating that source databases are properly configured and identifying any gaps. DMS receives the database replication log streams, and Etleap manages populating the downstream destination.
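The kind of setup validation described above can be pictured as comparing a source's observed settings against a list of requirements and reporting each gap. The sketch below is an assumption-laden illustration for a MySQL source: the `find_config_gaps` helper is hypothetical, and the required values shown are examples only, so check the AWS DMS documentation for the authoritative requirements for your engine and version.

```python
# Illustrative requirements for MySQL binary-log-based CDC. These values are
# assumptions for the sketch, not an authoritative or complete list.
REQUIRED_MYSQL_SETTINGS = {
    "log_bin": "ON",
    "binlog_format": "ROW",
    "binlog_row_image": "FULL",
}


def find_config_gaps(actual, required=REQUIRED_MYSQL_SETTINGS):
    """Return one human-readable message per setting that is missing or wrong."""
    gaps = []
    for name, expected in required.items():
        value = actual.get(name)
        if value is None or value.upper() != expected:
            gaps.append(f"{name}: expected {expected}, found {value or 'unset'}")
    return gaps


# In practice these values might come from running SHOW VARIABLES on the source.
observed = {"log_bin": "ON", "binlog_format": "MIXED"}

for gap in find_config_gaps(observed):
    print(gap)
```

Reporting every gap at once, rather than failing on the first, lets a user fix the source configuration in a single pass.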

Etleap ETL abstracts away the challenges of CDC to make it accessible to both engineers and analysts, providing end-to-end setup in under five minutes. The primary user activity is simply selecting individual database tables. Etleap ETL also makes pipeline management easy. It identifies any abnormalities in CDC pipelines and gives the user simple resolution steps without requiring any user interaction with DMS.

eMoney generates an abundance of customer data points from Outreach, Jira, Zuora, Salesforce, Mixpanel, and more. Etleap is the ETL solution that centralizes this data, giving eMoney unprecedented access to insights across these sources and enabling collaboration among customer, business operations, and product teams.

Moderna uses the easy Etleap user interface to build pipelines from S3 buckets, file stores, and hundreds of databases such as SQL Server, PostgreSQL, and MongoDB. Most of these were available “out of the box” with Etleap, including CDC for the databases, and Etleap quickly added integration support for Moderna’s non-standard sources.

You’re only moments away from a better way of doing ETL. We have expert, hands-on data engineers at the ready, 30-day free trials, and the best data pipelines in town, so what are you waiting for?